Tim Gardner, TweetyBERT: Uncovering Canary Song Syntax

March 9, 2024, 16:00 BST / 15:00 GMT / 10:00 EST / 07:00 PST

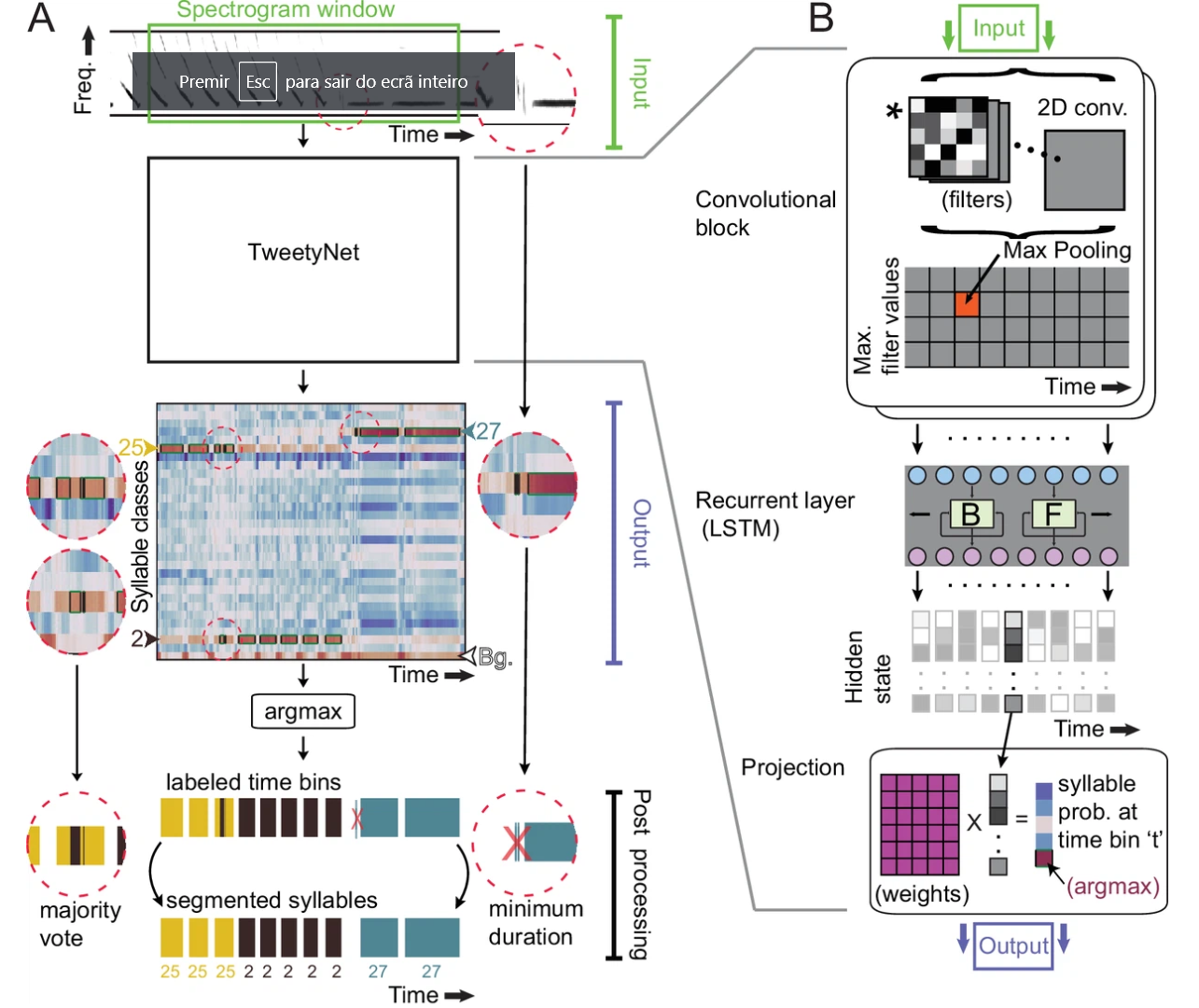

In this presentation, Tim describes a self-supervised transformer model designed to automatically parse complex bird songs. The talk begins with supervised models that replicate human annotations, then turns to TweetyBERT, a model that achieves comparable performance without human-labeled data.

This self-supervised transformer model belongs to the BERT family of large language models, which are trained to predict masked segments of a text sequence. TweetyBERT operates at a higher temporal resolution than previous models such as HuBERT, which was developed for human speech. A significant challenge in applying large language models (LLMs) to animal vocalizations is tokenizing the sounds, that is, converting them into a "text" format.
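The masked-prediction idea can be sketched in a few lines. The example below is only an illustration under stated assumptions, not TweetyBERT's implementation: the function names (`make_span_mask`, `apply_mask`, `masked_mse`), the span length, the zero fill value, and the mean-squared-error loss are all hypothetical choices, and the "frames" are made-up numbers standing in for a sequence of feature vectors.

```python
import random


def make_span_mask(n_frames, span_len, n_spans, rng):
    """Return a list of booleans; True marks a masked (hidden) frame."""
    mask = [False] * n_frames
    for _ in range(n_spans):
        start = rng.randrange(0, n_frames - span_len + 1)
        for t in range(start, start + span_len):
            mask[t] = True
    return mask


def apply_mask(frames, mask, fill=0.0):
    """Replace every masked frame with a constant vector of `fill`."""
    return [[fill] * len(f) if m else list(f) for f, m in zip(frames, mask)]


def masked_mse(predicted, target, mask):
    """Mean squared error computed over masked frames only."""
    total, count = 0.0, 0
    for p, y, m in zip(predicted, target, mask):
        if m:
            total += sum((a - b) ** 2 for a, b in zip(p, y))
            count += len(y)
    return total / count if count else 0.0


# Toy input: 20 time frames x 4 feature values (made-up numbers).
rng = random.Random(0)
frames = [[float(t + k) for k in range(4)] for t in range(20)]
mask = make_span_mask(len(frames), span_len=3, n_spans=2, rng=rng)
corrupted = apply_mask(frames, mask)

# A trained model would map `corrupted` to a prediction of the hidden frames.
# Here the corrupted input serves as a stand-in prediction (a naive baseline),
# and the original frames serve as a stand-in for a perfect prediction.
baseline_loss = masked_mse(corrupted, frames, mask)
perfect_loss = masked_mse(frames, frames, mask)
```

Scoring the loss only on hidden frames is what forces a model of this kind to use the surrounding context rather than copy its input.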

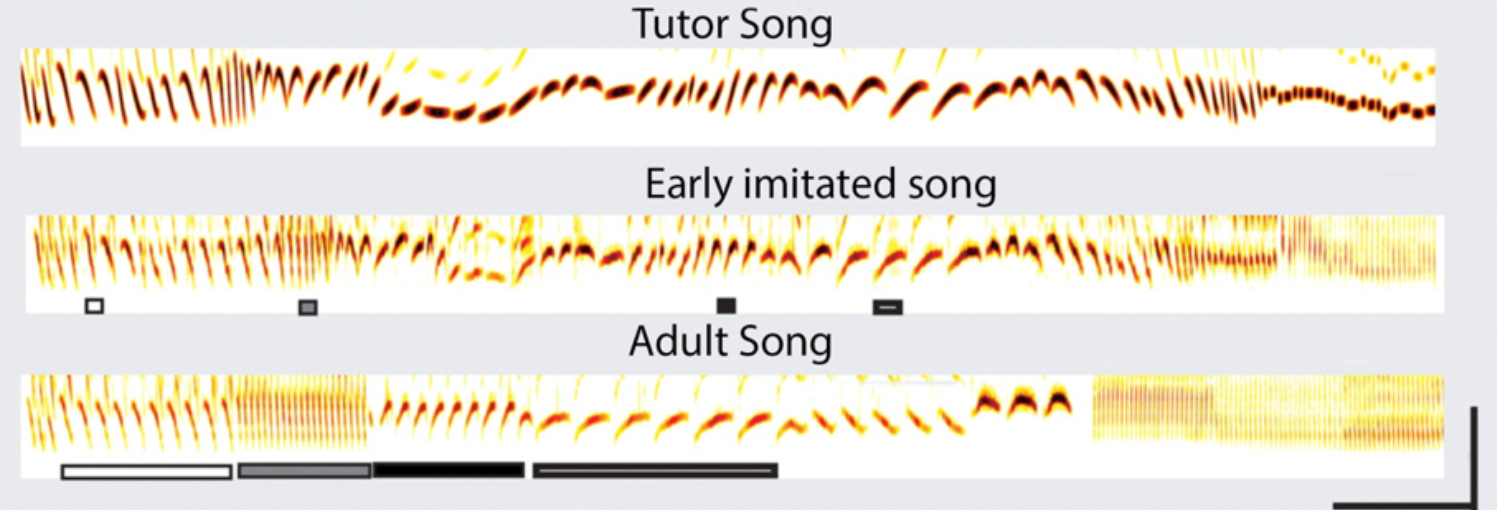

The model Tim will discuss bypasses the need for tokenization, working directly with sound represented as spectrograms. This self-supervised approach develops an internal representation of song syllables that aligns with the known biophysics of vocal production in canaries, and its syllable clusters correspond closely with human annotations. With automated clustering, researchers can now efficiently study the statistical structure of complex songs.
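One simple way to quantify how closely automatically derived clusters match human annotations is cluster purity. The sketch below is an assumption for illustration only: the talk description does not say which agreement measure is used, the function name `cluster_purity` is hypothetical, and the cluster IDs and labels are made-up toy values.

```python
from collections import Counter, defaultdict


def cluster_purity(cluster_ids, human_labels):
    """Fraction of items whose human label is the majority label of their cluster."""
    if len(cluster_ids) != len(human_labels):
        raise ValueError("cluster_ids and human_labels must have the same length")
    if not cluster_ids:
        return 0.0
    by_cluster = defaultdict(Counter)
    for c, h in zip(cluster_ids, human_labels):
        by_cluster[c][h] += 1
    # For each cluster, count the items carrying its most common human label.
    majority_total = sum(
        counts.most_common(1)[0][1] for counts in by_cluster.values()
    )
    return majority_total / len(cluster_ids)


# Toy data: 8 syllables, 3 automatic clusters, 3 human labels.
clusters = [0, 0, 0, 1, 1, 1, 2, 2]
labels = ["a", "a", "b", "b", "b", "b", "c", "c"]
purity = cluster_purity(clusters, labels)  # (2 + 3 + 2) / 8 = 0.875
```

Purity rewards over-splitting (one cluster per item scores 1.0), so in practice it is usually read alongside a complementary measure such as completeness.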

TweetyBERT reveals that long-range rules influence the sequence of canary songs: the selection of the subsequent phrase in a song is contingent on the song's history, with some syllables following specific rules that maintain correlations in the song over periods of up to ten seconds.
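Dependence on song history can be illustrated with a generic information-theoretic check: if knowing more of the preceding phrases lowers the uncertainty about the next phrase, the sequence is not a simple first-order chain. The sketch below is not the analysis from the talk. The function name `conditional_entropy` is hypothetical, and the phrase sequence is a made-up toy in which the phrase after `B` depends on what came before `B`.

```python
import math
from collections import Counter, defaultdict


def conditional_entropy(sequence, order):
    """Entropy in bits of the next symbol given the previous `order` symbols."""
    context_counts = defaultdict(Counter)
    for i in range(order, len(sequence)):
        context = tuple(sequence[i - order:i])
        context_counts[context][sequence[i]] += 1
    total = sum(sum(c.values()) for c in context_counts.values())
    h = 0.0
    for counts in context_counts.values():
        n_ctx = sum(counts.values())
        for n in counts.values():
            p = n / n_ctx
            # Weight each context by how often it occurs.
            h -= (n_ctx / total) * p * math.log2(p)
    return h


# Toy phrase sequence: "A B" is always followed by "C", "D B" by "E".
song = ["A", "B", "C", "D", "B", "E"] * 50

h1 = conditional_entropy(song, order=1)  # one phrase of history: B is ambiguous
h2 = conditional_entropy(song, order=2)  # two phrases of history: fully determined
```

In this toy case `h1` is positive while `h2` is zero, because the extra phrase of history resolves the ambiguity after `B`. Real song data would need far longer contexts and a correction for limited sample size.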

Large language models promise to rapidly advance our understanding of animal vocalizations. For complex singers in particular, these models are opening areas of research that were previously impractical to explore because of the prohibitive cost of manual song annotation.

About the speaker

Tim Gardner is an Associate Professor of Bioengineering at the Knight Campus in Oregon. He earned his PhD in Physics and Biology from the Rockefeller University in 2003. In 2009, he joined the faculty at Boston University, where his lab focused on vocal learning in zebra finches. In 2016, he moved to San Francisco to join the founding team of Neuralink, where he worked on human brain-computer interfaces. After three years with Neuralink, Tim returned to working with songbirds, this time at the University of Oregon, with a focus on canaries and machine learning methods for animal vocalizations.